GraphRAG vs. Vanilla RAG: Structure, Signal, and the End of Hallucinations

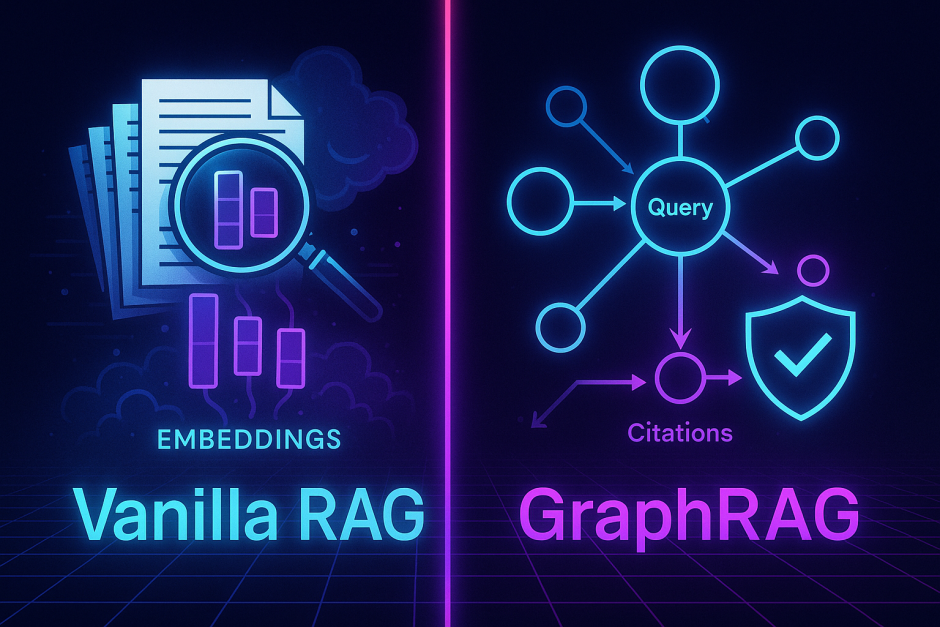

TL;DR: Vanilla RAG (vector search → stuff context → generate) is great for “find and summarize.” It breaks on multi-hop questions, entity-heavy domains, and anything that needs joins, constraints, or causality. GraphRAG adds an explicit knowledge graph—entities, relationships, events—so the model retrieves structured facts first, then uses text passages as evidence. The result: higher factuality, controllable reasoning, and answers you can audit.

1) Quick refresher: what Vanilla RAG solves—and where it fails

Vanilla RAG pipeline

- Chunk documents → embed chunks

- Query → embed → k-NN retrieve top-k chunks

- LLM reads chunks and drafts answer (+ citations)
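
The three steps above can be sketched in a few lines. This is a toy illustration, not a production retriever: `embed` is a bag-of-words stand-in for a real embedding model, and the chunk texts, function names, and prompt wording are all assumptions made for the example.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Brute-force k-NN: return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Stuff the retrieved chunks into the prompt, numbered for citation."""
    numbered = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return f"Answer using only the context below and cite sources.\n{numbered}\nQuestion: {query}"

# Hypothetical document chunks.
chunks = [
    "Refunds are issued within 14 days of a return request.",
    "Our warehouse in Berlin ships EU orders.",
    "Return requests need the original order number.",
]
top = retrieve("how are refunds issued", chunks, k=2)
prompt = build_prompt("how are refunds issued", top)
```

Note that nothing in this pipeline knows about entities or relationships; it only ranks text by similarity, which is where the failure modes come from.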

Common failure modes

- Lost structure: “Is Alice Bob’s manager’s manager?” requires relational hops, not keyword similarity.

- Context collision: top-k includes near-duplicates or contradictory snippets; the LLM “averages” them.

- Semantic drift: embeddings pull in thematically similar but factually irrelevant text (high recall, low precision).

- No constraints: “only EU orders last 30 days” is temporal + geo filtering—hard to enforce with plain vectors.
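
To make the "lost structure" case concrete, here is a minimal sketch of the manager question answered as a traversal. The `REPORTS_TO` mapping and the names in it are hypothetical; a real system would read these edges from the graph store.

```python
# Hypothetical reporting edges: employee -> direct manager.
REPORTS_TO = {"Bob": "Carol", "Carol": "Alice", "Dave": "Carol"}

def manager_chain(person: str) -> list[str]:
    """Follow REPORTS_TO edges upward until the top (or a cycle) is reached."""
    chain, seen = [], {person}
    while person in REPORTS_TO and REPORTS_TO[person] not in seen:
        person = REPORTS_TO[person]
        chain.append(person)
        seen.add(person)
    return chain

def is_nth_manager(candidate: str, person: str, hops: int) -> bool:
    """True if `candidate` sits exactly `hops` levels above `person`."""
    chain = manager_chain(person)
    return len(chain) >= hops and chain[hops - 1] == candidate

# "Is Alice Bob's manager's manager?" is a 2-hop check.
answer = is_nth_manager("Alice", "Bob", hops=2)
```

The answer depends only on the edges, so it is the same no matter how the source documents happened to phrase the reporting lines.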

2) What is GraphRAG?

GraphRAG augments RAG with a knowledge graph (KG): nodes (entities/events) + edges (relationships). Retrieval becomes two-stage, followed by a composition step:

- Structured retrieval: Use the KG to answer who/what/when/where/how-related via graph queries (e.g., Cypher/Gremlin/SQL-graph).

- Evidence expansion: For the nodes/edges returned, fetch the most relevant passages (vectors/BM25) for citations and wording.

- Compose: LLM assembles a grounded answer using the graph facts as the spine and passages as evidence.
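
A rough sketch of that flow, assuming an in-memory list of facts in place of a graph database and passages that were tagged with entity IDs at ingestion time. The `Fact` and `Passage` types, the sample data, and the function names are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str

@dataclass(frozen=True)
class Passage:
    doc_id: str
    text: str
    entities: frozenset

# Hypothetical toy data standing in for a graph store and a passage index.
FACTS = [
    Fact("Order123", "HAS_ITEM", "SKU-9"),
    Fact("Order123", "RETURNED_FOR", "wrong size"),
    Fact("Order456", "HAS_ITEM", "SKU-2"),
]
PASSAGES = [
    Passage("ticket-77", "Customer says SKU-9 runs a size small.", frozenset({"SKU-9", "Order123"})),
    Passage("policy-3", "Returns are accepted within 30 days.", frozenset({"Policy"})),
    Passage("ticket-81", "SKU-2 arrived late.", frozenset({"SKU-2"})),
]

def structured_retrieval(entity: str) -> list[Fact]:
    """Stage 1: exact graph lookup instead of similarity search."""
    return [f for f in FACTS if f.subject == entity]

def evidence_expansion(facts: list[Fact], limit: int = 3) -> list[Passage]:
    """Stage 2: fetch passages that mention any node touched by the facts."""
    touched = {f.subject for f in facts} | {f.obj for f in facts}
    return [p for p in PASSAGES if p.entities & touched][:limit]

def compose_input(entity: str) -> dict:
    """Bundle facts (the spine) and passages (the evidence) for the LLM."""
    facts = structured_retrieval(entity)
    return {"facts": facts, "evidence": evidence_expansion(facts)}

bundle = compose_input("Order123")
```

In a real deployment the second stage would rank candidates with vectors or BM25 rather than take the first matches; the point here is only the ordering of the stages.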

Why it works

- Graphs preserve relationships, constraints, and time.

- Multi-hop reasoning becomes a graph traversal instead of something the LLM has to infer (“customer → orders → returns → root cause”).

- The LLM becomes a narrator, not a database.

3) When GraphRAG decisively beats Vanilla RAG

- Entity-dense domains: customers, SKUs, suppliers, contracts, policies.

- Multi-hop questions: “Which campaigns drove first-order profit for customers who later churned?”

- Constraint queries: time windows, geo/regulatory filters, role-based scopes.

- Why/how questions requiring event chains: incident → mitigation → outcome.

- De-duplication & canonical truth: unify aliases (e.g., RP, Report Pundit, ReportPundit).

4) Architecture blueprint

Ingestion

- NER & linking: extract entities (people, SKUs, tickets, policies), link to canonical IDs.

- Relation/event extraction: “Order123 → returned_due_to → ‘wrong size’ (2025-07-10)”.

- Dedup & merge: consolidate variants, attach provenance (doc, line, timestamp).
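
As a minimal sketch of linking and merging, the snippet below resolves surface forms to a canonical ID through an alias table and keeps provenance for every mention. The canonical ID `org:report-pundit`, the alias table, and the mention tuples are hypothetical; real pipelines typically combine an NER model with fuzzier matching.

```python
from dataclasses import dataclass, field

def normalize(name: str) -> str:
    """Lowercase and drop everything except letters and digits."""
    return "".join(ch for ch in name.lower() if ch.isalnum())

# Hypothetical alias table: normalized surface form -> canonical ID.
ALIASES = {"rp": "org:report-pundit", "reportpundit": "org:report-pundit"}

@dataclass
class Entity:
    canonical_id: str
    surface_forms: set = field(default_factory=set)
    provenance: list = field(default_factory=list)  # (doc_id, line) pairs

def link(mentions: list[tuple[str, str, int]]) -> dict[str, Entity]:
    """Merge (surface, doc_id, line) mentions into canonical entities."""
    entities: dict[str, Entity] = {}
    for surface, doc_id, line in mentions:
        canonical_id = ALIASES.get(normalize(surface))
        if canonical_id is None:
            continue  # unresolved mentions are skipped here; a real system would queue them for review
        entity = entities.setdefault(canonical_id, Entity(canonical_id))
        entity.surface_forms.add(surface)
        entity.provenance.append((doc_id, line))
    return entities

linked = link([
    ("RP", "contract.pdf", 12),
    ("Report Pundit", "email-401", 3),
    ("ReportPundit", "ticket-77", 1),
    ("Acme", "ticket-78", 2),
])
```

All three aliases collapse into one node, and each mention keeps a pointer back to where it was seen.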

Storage

- Graph DB (Neo4j/Neptune/Arango/etc.) for facts

- Vector index for passages (+ BM25 for sparse hits)

Query orchestration

- Intent classifier → graph mode vs. text mode

- If graph mode: run graph query → get nodes/edges

- Expand neighborhood to collect supportive docs

- Rank with hybrid (dense + sparse + recency)

- Generate with grounding schema (facts table + citations)
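
The first step, routing between graph mode and text mode, could start as something as simple as the rule-based sketch below. The patterns are illustrative assumptions, not a tested classifier; a trained intent model would normally replace them.

```python
import re

# Hypothetical cues that a question needs joins, ranking, or constraints.
GRAPH_PATTERNS = [
    r"\blast \d+ days\b",          # time window
    r"\b(highest|lowest|top \d+)\b",  # aggregation / ranking
    r"\bin the (eu|us)\b",         # region filter
    r"\bmanager's manager\b",      # explicit multi-hop
]

def route(question: str) -> str:
    """Return 'graph' when any structural cue matches, else 'text'."""
    if any(re.search(p, question, re.IGNORECASE) for p in GRAPH_PATTERNS):
        return "graph"
    return "text"

mode_a = route("Which SKUs had the highest refund rate in the EU in the last 30 days?")
mode_b = route("Summarize our return policy.")
```

Questions routed to text mode can keep using the existing Vanilla RAG path unchanged.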

Observability

- Store traces: graph query, node set, evidence, final answer

- Metrics: multi-hop precision, grounding rate, contradicting-evidence flags

5) Data model starter kit (ecommerce-flavored)

Entities (nodes): Customer, Order, LineItem, SKU, Ticket, Campaign, Policy, Region, Supplier

Relationships (edges):

- Customer-PLACED->Order (t)

- Order-HAS_ITEM->LineItem

- LineItem-OF->SKU

- Order-RETURNED_FOR->Reason (t)

- Ticket-RELATES_TO->Order/SKU

- Campaign-ATTRIBUTED->Order (model, confidence)

- SKU-SUPPLIED_BY->Supplier

- Order-IN_REGION->Region

Properties: timestamps, numeric values (price, margin, return_rate), booleans (is_promo), lists (tags), and provenance (doc_id, URL, paragraph_id).
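
One way to keep such a model honest is to validate every edge against the ontology before it is written. The sketch below assumes simple `Node` and `Edge` dataclasses and an `ALLOWED_EDGES` set transcribed from the relationships above; the class names, the `validate` helper, and the sample values are hypothetical.

```python
from dataclasses import dataclass, field

# (source label, relationship, target label) triples from the starter ontology.
ALLOWED_EDGES = {
    ("Customer", "PLACED", "Order"),
    ("Order", "HAS_ITEM", "LineItem"),
    ("LineItem", "OF", "SKU"),
    ("Order", "RETURNED_FOR", "Reason"),
    ("Ticket", "RELATES_TO", "Order"),
    ("Ticket", "RELATES_TO", "SKU"),
    ("Campaign", "ATTRIBUTED", "Order"),
    ("SKU", "SUPPLIED_BY", "Supplier"),
    ("Order", "IN_REGION", "Region"),
}

@dataclass(frozen=True)
class Node:
    label: str
    key: str

@dataclass
class Edge:
    src: Node
    rel: str
    dst: Node
    props: dict = field(default_factory=dict)
    provenance: dict = field(default_factory=dict)

def validate(edge: Edge) -> Edge:
    """Reject edges that are outside the ontology or lack a source document."""
    triple = (edge.src.label, edge.rel, edge.dst.label)
    if triple not in ALLOWED_EDGES:
        raise ValueError(f"edge not in ontology: {triple}")
    if not edge.provenance.get("doc_id"):
        raise ValueError("edge is missing provenance (doc_id)")
    return edge

returned = validate(Edge(
    Node("Order", "Order123"), "RETURNED_FOR", Node("Reason", "wrong size"),
    props={"t": "2025-07-10"},
    provenance={"doc_id": "ticket-77", "paragraph_id": 4},
))
```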

6) Example: decomposing a complex question

Question

“Which SKUs had the highest refund rate in the EU in the last 30 days, and what root causes appear most often in support tickets? Provide evidence.”

Plan

- Graph query → eligible orders in EU, 30-day window → returns by SKU

- Aggregate refund rate by SKU, pick top 5

- Traverse to related tickets → cluster root causes

- Pull supporting passages (policy, common ticket notes)

- Generate ranked list with citations

Cypher sketch

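// Orders placed in the EU during the last 30 days (assumes o.date is a date property)

MATCH (o:Order)-[:IN_REGION]->(:Region {code:"EU"})

WHERE o.date >= date() - duration('P30D')

MATCH (o)-[:HAS_ITEM]->(:LineItem)-[:OF]->(s:SKU)

OPTIONAL MATCH (o)-[:RETURNED_FOR]->(r:Reason)

// Count each order once per SKU, even if it has several line items or reasons

WITH s, count(DISTINCT o) AS orders, count(DISTINCT CASE WHEN r IS NOT NULL THEN o END) AS returns

WITH s, returns * 1.0 / orders AS refund_rate

ORDER BY refund_rate DESC LIMIT 5

// OPTIONAL MATCH keeps SKUs that have no tickets yet

OPTIONAL MATCH (t:Ticket)-[:RELATES_TO]->(s)

WITH s, refund_rate, collect(t.summary)[0..200] AS ticket_summaries

RETURN s.sku_id AS sku, refund_rate, ticket_summaries

ORDER BY refund_rate DESC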
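
To sanity-check the aggregation logic outside the database, the same calculation can be run over a handful of in-memory orders. This is a sketch under assumptions: the order dictionaries, their field names, and the `refund_rates` helper are invented for illustration, and returns are tracked per order (as in the Cypher sketch), not per line item.

```python
from collections import defaultdict
from datetime import date, timedelta

def refund_rates(orders, today, region="EU", window_days=30, top_n=5):
    """Share of in-window regional orders per SKU that have a return reason."""
    cutoff = today - timedelta(days=window_days)
    placed, returned = defaultdict(set), defaultdict(set)
    for o in orders:
        if o["region"] != region or o["date"] < cutoff:
            continue
        for sku in o["skus"]:
            placed[sku].add(o["id"])  # sets de-duplicate repeated line items
            if o["return_reason"] is not None:
                returned[sku].add(o["id"])
    rates = {sku: len(returned[sku]) / len(ids) for sku, ids in placed.items()}
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)[:top_n]

# Hypothetical orders.
orders = [
    {"id": "o1", "region": "EU", "date": date(2025, 7, 10), "skus": ["A", "A"], "return_reason": "wrong size"},
    {"id": "o2", "region": "EU", "date": date(2025, 7, 20), "skus": ["A", "B"], "return_reason": None},
    {"id": "o3", "region": "EU", "date": date(2025, 5, 1), "skus": ["B"], "return_reason": "damaged"},
    {"id": "o4", "region": "US", "date": date(2025, 7, 15), "skus": ["B"], "return_reason": "damaged"},
]
ranking = refund_rates(orders, today=date(2025, 7, 31))
```

Order `o1` lists SKU A twice but is counted once, and `o3` and `o4` are excluded by the time and region filters, so SKU A ends up at 1 of 2 orders returned and SKU B at 0 of 1.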

Evidence expansion

- For each `sku`, fetch top passages mentioning return reasons/policies via vector + BM25, constrained to `sku_id` and the last 90 days.
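
A minimal sketch of that constrained fetch, assuming each passage already carries a dense (vector) score and a sparse (BM25) score for the current query. The field names, the 0.6/0.4 weights, and the `expand_evidence` helper are assumptions chosen for illustration.

```python
from datetime import date, timedelta

def expand_evidence(passages, sku_id, today, max_age_days=90, k=3,
                    w_dense=0.6, w_sparse=0.4):
    """Hard-filter by SKU and recency first, then rank by a weighted hybrid score."""
    cutoff = today - timedelta(days=max_age_days)
    eligible = [p for p in passages if p["sku_id"] == sku_id and p["date"] >= cutoff]
    score = lambda p: w_dense * p["dense"] + w_sparse * p["sparse"]
    return sorted(eligible, key=score, reverse=True)[:k]

# Hypothetical passages with precomputed scores.
passages = [
    {"id": "p1", "sku_id": "A", "date": date(2025, 7, 1), "dense": 0.9, "sparse": 0.2},
    {"id": "p2", "sku_id": "A", "date": date(2025, 6, 15), "dense": 0.5, "sparse": 0.9},
    {"id": "p3", "sku_id": "A", "date": date(2025, 1, 1), "dense": 0.99, "sparse": 0.99},
    {"id": "p4", "sku_id": "B", "date": date(2025, 7, 2), "dense": 0.8, "sparse": 0.8},
]
evidence = expand_evidence(passages, sku_id="A", today=date(2025, 7, 31))
```

Applying the filters before ranking is the design choice that matters: a stale or off-SKU passage cannot win on similarity alone.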

Answer composition guardrails

- Output table (SKU, Refund Rate, Top Causes, Citations)

- Disallow claims without ≥2 supporting passages

- Include confidence based on coverage of orders vs. tickets
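
These guardrails can be enforced in code before anything reaches the model. The sketch below drops causes with fewer than two distinct citations and derives a confidence value as the ratio of tickets to returns, capped at 1.0. That confidence formula is only one possible reading of "coverage of orders vs. tickets", and the row structure is a hypothetical one.

```python
def apply_guardrails(rows, min_citations=2):
    """Keep only well-cited causes and attach a coverage-based confidence."""
    output = []
    for row in rows:
        causes = {
            cause: sorted(set(cites))
            for cause, cites in row["causes"].items()
            if len(set(cites)) >= min_citations
        }
        if row["return_count"]:
            confidence = min(1.0, row["ticket_count"] / row["return_count"])
        else:
            confidence = 0.0
        output.append({
            "sku": row["sku"],
            "refund_rate": row["refund_rate"],
            "top_causes": causes,
            "confidence": round(confidence, 2),
        })
    return output

# Hypothetical aggregated row for one SKU.
table = apply_guardrails([{
    "sku": "A",
    "refund_rate": 0.5,
    "causes": {"wrong size": ["ticket-77", "ticket-80"], "late delivery": ["ticket-81"]},
    "ticket_count": 3,
    "return_count": 4,
}])
```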

7) Prompts that keep answers grounded

System:

“You are a factual analyst. Use ONLY the FACTS table to make claims. Use EVIDENCE passages for wording and quotes. If a claim is not in FACTS, say ‘insufficient data.’ Return a table + bullet summary. Always include citations.”

Inputs to the model

- FACTS: compact JSON from graph query (aggregates, IDs)

- EVIDENCE: 5–12 short passages (source, snippet, timestamp)

- POLICY: redaction rules (PII), allowed scopes (region, date)
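
Assembling those inputs might look like the sketch below. The role/content message shape is a common chat convention assumed here, not a specific vendor API, and `build_messages` and its parameters are hypothetical.

```python
import json

SYSTEM_PROMPT = (
    "You are a factual analyst. Use ONLY the FACTS table to make claims. "
    "Use EVIDENCE passages for wording and quotes. If a claim is not in FACTS, "
    "say 'insufficient data.' Return a table + bullet summary. Always include citations."
)

def build_messages(question, facts, evidence, policy, max_evidence=12):
    """Serialize FACTS, EVIDENCE, and POLICY into one user message."""
    payload = {
        "FACTS": facts,
        "EVIDENCE": evidence[:max_evidence],  # cap the passage budget
        "POLICY": policy,
    }
    user = f"{json.dumps(payload, indent=2, sort_keys=True)}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user},
    ]

# Hypothetical inputs.
messages = build_messages(
    question="Which SKUs had the highest refund rate?",
    facts=[{"sku": "A", "refund_rate": 0.5}],
    evidence=[{"source": f"ticket-{i}", "snippet": "runs small", "timestamp": "2025-07-10"} for i in range(20)],
    policy={"redact": ["email"], "region": "EU"},
)
```

Sorting the JSON keys keeps the prompt byte-identical for identical inputs, which helps when checking that the same FACTS lead to the same conclusion.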

8) Evaluation: what to measure (beyond BLEU)

- Grounding rate: % sentences backed by a fact or evidence passage

- Contradiction rate: % outputs contradicted by retrieved evidence

- Multi-hop precision@k: correctness on 2+ hop queries

- Coverage: % of relevant entities touched in the graph result

- Temporal accuracy: correct application of date filters

- Edit distance / CSR time saved: how many human edits an answer needs before it can be published

- Answer reproducibility: same `FACTS` → same conclusion

Create a golden set of 100–300 questions with canonical answers + graph queries + acceptable evidence ranges.
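
Two of these metrics are simple enough to sketch directly. The input shapes below (sentences paired with their supporting IDs, and runs paired as serialized facts plus conclusion) are assumptions; how sentences get matched to supporting facts is the hard part and is not shown.

```python
def grounding_rate(sentences):
    """Fraction of sentences that have at least one supporting fact or passage."""
    if not sentences:
        return 0.0
    backed = sum(1 for _text, support in sentences if support)
    return backed / len(sentences)

def is_reproducible(runs):
    """True if every distinct FACTS input always led to a single conclusion."""
    conclusions = {}
    for facts_json, conclusion in runs:
        conclusions.setdefault(facts_json, set()).add(conclusion)
    return all(len(c) == 1 for c in conclusions.values())

# Hypothetical labeled output: (sentence, IDs of supporting facts/passages).
answer = [
    ("SKU A has a 50% refund rate.", ["fact-1"]),
    ("The top cause is sizing.", ["ticket-77", "ticket-80"]),
    ("Customers love the color.", []),
    ("Returns peaked in July.", ["fact-2"]),
]
rate = grounding_rate(answer)

runs = [('{"sku":"A"}', "A leads"), ('{"sku":"A"}', "A leads"), ('{"sku":"B"}', "B leads")]
stable = is_reproducible(runs)
```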

9) Cost & latency playbook

- Cache neighborhoods: precompute high-demand subgraphs (top SKUs, VIP customers).

- Two-tier retrieval: fast graph filter → lazy evidence fetch.

- Vector budget: cap to 6–10 passages; prefer shorter, denser chunks.

- Pre-summaries: store nightly rollups (e.g., refund leaders) for sub-100ms answers.

- SLM default: use a small model to compose grounded answers; escalate to LLM only for ambiguous or sparse evidence cases.
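
Two of these tactics, neighborhood caching and small-model-first routing, can be sketched with the standard library. The `GRAPH` dictionary stands in for a graph store, and the `min_evidence` threshold and the model labels are illustrative assumptions.

```python
from functools import lru_cache

# Hypothetical adjacency data standing in for a graph database.
GRAPH = {"SKU-9": ("Order123", "Ticket77"), "SKU-2": ("Order456",)}
CALLS = {"count": 0}  # counts real lookups so cache hits are visible

@lru_cache(maxsize=1024)
def neighborhood(node_id: str) -> tuple:
    """Fetch a node's neighbors; repeated calls are served from the cache."""
    CALLS["count"] += 1
    return GRAPH.get(node_id, ())

def pick_model(evidence_count: int, min_evidence: int = 3) -> str:
    """Default to the small model; escalate when evidence is sparse."""
    return "small" if evidence_count >= min_evidence else "large"
```

Calling `neighborhood("SKU-9")` twice triggers only one lookup. With a nightly graph refresh, `neighborhood.cache_clear()` would need to run after each refresh so cached subgraphs do not go stale.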

10) 30-60-90 migration plan

Days 0–30 — MVP GraphRAG

- Pick 1–2 high-value question families (refunds, compliance, SLA breaches).

- Design a minimal ontology (entities/edges/properties).

- Ingest 3–5 core sources; build entity linker; attach provenance.

- Wire graph → evidence → grounded generation for one report.

Days 31–60 — Hardening

- Add temporal & geo constraints; implement RBAC scopes.

- Build dashboards: grounding rate, contradiction rate, latency, cost.

- Introduce critic model to veto ungrounded claims.

Days 61–90 — Scale & catalog

- Expand ontology (campaigns, suppliers, incidents).

- Add job to refresh graph nightly; backfill historical events.

- Create an agent entrypoint (“Why did returns spike for SKU-X?”) that uses GraphRAG under the hood.

- Templatize queries and prompts; publish an internal GraphRAG cookbook.

11) Pitfalls & anti-patterns

- Ontology bloat: start lean; add edges only when a real question needs them.

- No provenance: every node/edge must carry source + timestamp—non-negotiable.

- Over-chunking docs: too many near-duplicates overwhelm ranking.

- Letting the LLM “infer” facts: the graph is the source of truth; the LLM narrates.

- Ignoring freshness: stale graphs destroy trust; schedule updates and mark recency in answers.

12) Quick decision scorecard

| Situation | Vanilla RAG | GraphRAG |

|---|---|---|

| Single-hop Q&A / FAQ | ✅ | – |

| Policy lookup with simple filters | ✅ | – |

| Multi-hop joins (who→what→why) | – | ✅ |

| Strict time/region constraints | ⚠️ | ✅ |

| Entity resolution & dedupe | – | ✅ |

| Auditability & provenance | ⚠️ | ✅ |

(✅ = strong fit, ⚠️ = workable with care, – = not ideal)

The bottom line

If your questions look like joins with rules, you’ve outgrown Vanilla RAG. Adopt GraphRAG: let the graph deliver structured truth, let vectors bring the right words, and let the model compose with receipts. You’ll cut hallucinations, speed up expert workflows, and—most importantly—ship answers your auditors (and customers) can trust.

Starter checklist

- Define a minimal ontology tied to 2–3 real questions

- Build NER + entity linking with canonical IDs & provenance

- Stand up a graph store + vector index; wire a two-stage retriever

- Add grounding-aware prompts + a critic to block unsupported claims

- Track grounding, contradiction, and temporal accuracy on a golden set